- It is a Big Data streaming platform and event ingestion service, capable of receiving and processing millions of events per second.

- It can process and store events, data, or telemetry produced by distributed software and devices.

- Data sent to an event hub can be transformed and stored using any real-time analytics provider or batching/storage adapters.

- Event Hubs represents the "front door" for an event pipeline, often called an event ingestor in solution architectures.

- An event ingestor is a service that sits between event publishers and event consumers to decouple the production of an event stream from the consumption of those events.

Concepts

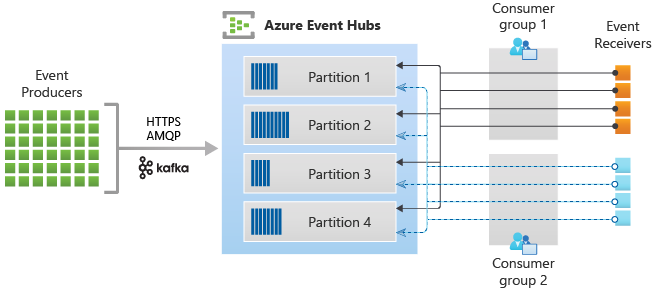

- Event Producers: Any entity that sends data to an event hub.

- Event publishers can publish events using HTTPS, AMQP 1.0, or the Apache Kafka protocol.

- Partitions: Each consumer reads only a specific subset (partition) of the message stream.

- Consumer Groups: A view (state, position, or offset) of an entire event hub.

- Consumer groups enable multiple consuming applications to each have a separate view of the event stream, and to read the stream independently.

- Throughput Units: Units of capacity that control the throughput of Event Hubs.

- Event Receivers: Any entity that reads event data from an event hub.

- All Event Hubs consumers connect over an AMQP 1.0 session.

- Events are delivered through the session as they become available.
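The relationship between partitions and consumer groups can be illustrated with a small in-memory model. This is only a sketch under stated assumptions: it does not use the Azure SDK, the names `ToyEventHub`, `send`, and `receive` are hypothetical, and the SHA-256 key hashing is a stand-in for whatever partition assignment the real service performs. It shows two ideas from the list above: events with the same partition key land in the same partition, and each consumer group keeps its own read position.

```python
from collections import defaultdict
from hashlib import sha256


class ToyEventHub:
    """Hypothetical in-memory stand-in for an event hub (not the Azure SDK)."""

    def __init__(self, partition_count):
        # Each partition is an append-only list of event bodies.
        self.partitions = [[] for _ in range(partition_count)]
        # offsets[consumer_group][partition_index] -> next position to read.
        self.offsets = defaultdict(lambda: defaultdict(int))

    def send(self, partition_key, body):
        # Assumption: hash the key so equal keys map to the same partition.
        digest = sha256(partition_key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % len(self.partitions)
        self.partitions[index].append(body)
        return index

    def receive(self, consumer_group, partition_index, max_events=10):
        # Each consumer group advances its own offset, so groups read
        # the same partition independently of one another.
        start = self.offsets[consumer_group][partition_index]
        batch = self.partitions[partition_index][start:start + max_events]
        self.offsets[consumer_group][partition_index] = start + len(batch)
        return batch


hub = ToyEventHub(partition_count=4)
p = hub.send("device-42", "temp=21.5")
hub.send("device-42", "temp=21.7")

dashboard = hub.receive("dashboard", p)   # first consumer group
archiver = hub.receive("archiver", p)     # second group sees the same events
print(dashboard)
print(archiver)
print(hub.receive("dashboard", p))        # nothing new for "dashboard"
```

In this model both groups receive the two events for `device-42`, and a second read by the `dashboard` group returns an empty list because only that group's offset has advanced.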

Common Scenarios

- Anomaly detection (fraud/outliers)

- Application logging

- Analytics pipelines, such as clickstreams

- Live dashboarding

- Archiving data

- Transaction processing

- User telemetry processing

- Device telemetry streaming